Trustworthy Messages

This is the third post revolving around the trust issues facing most facets of using the services offered by the cloud. I've questioned the need for trusting content and I've sketched a decentralized authentication protocol. Now we must turn our attention to the real reason for jumping through all these hoops: the content – the message.

I guess most of you have played the game that goes a little like this: line up in rows of 4-7 people, give the first person in each row a pen and a piece of paper, and let everyone else stand close enough to reach the back of the person in front of them. Now draw a simple figure on the back of the person at the back of each row, and have each person draw this figure on the back of the person in front of them, until the figure reaches the person at the front of each row. Finally, have the first-in-row people draw the figure on paper. Chances are that 1 in every 10 rows gets it fairly right.

Quite an introduction to signal/noise in messages, right? And that's even without introducing any external disturbances like poor light, loud music, and more.

Keeping messages safe traveling from the sender to the receiver is quite a feat, hence any message should be verified against a hash calculated just as it leaves the sender.

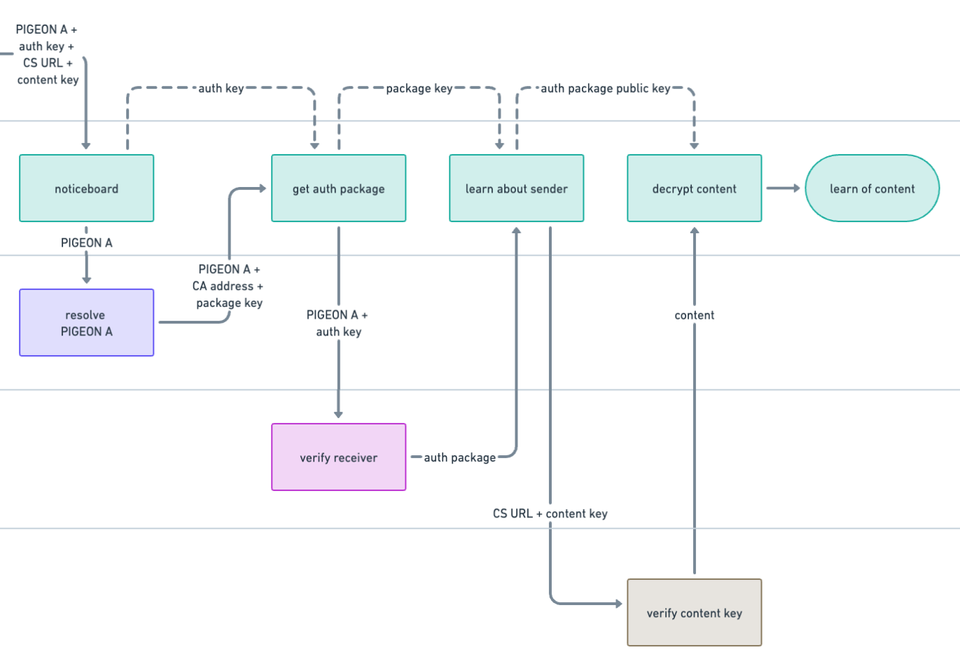

So, in order to allow for secure transmission of trustworthy messages, what must we have?

- We must allow for full transparency pertaining to the compilation of the message, in effect allowing the receiver to arrive at the same "conclusion" as the sender did once they finished compiling the message.

- We must allow the receiver to question whether the message is exactly as intended by the sender.

- Finally, we must cater for the paranoia of the receiver – allow them to assure themselves of the authenticity of the sender.

We've dealt with the third point in a previous post.

The second point could be dealt with rather mundanely using a simple hash. The sender will have to keep this hash readily available at all times (they will probably 'outsource' the job to some machine that receivers' machines can question on an always-on basis).
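As a minimal sketch of that hash check, assuming SHA-256 as the hash (the post does not name one) and using made-up function names and a made-up message, the receiver's side could look something like this:

```python
import hashlib
import hmac


def digest(message: bytes) -> str:
    """Return the SHA-256 hex digest of the message bytes (SHA-256 is an assumption)."""
    return hashlib.sha256(message).hexdigest()


def is_unaltered(received: bytes, published_digest: str) -> bool:
    """Compare the digest of what arrived with the digest the sender keeps available."""
    return hmac.compare_digest(digest(received), published_digest)


original = b"Meet me at the old oak at noon."
published = digest(original)  # calculated just as the message leaves the sender

print(is_unaltered(original, published))
print(is_unaltered(b"Meet me at the old oak at nine.", published))
```

A bare hash only tells the receiver that the bytes match what the sender's machine published; it says nothing about who the sender is, which is why the authentication from the previous post is still needed.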

The first point is a totally different animal! Current levels of technology offer many facets of this transparency, but in order to be practical in operation these technologies will have to be bundled in novel ways. I'm not able to describe this toolset in proper detail, but I will disclose a few glimpses as best I can:

Written content may prove the easy one. When starting on a new message (whether an SMS or the next War & Peace) the author will initialize a repository for persisting every small change, and then every few hundred milliseconds the workbench/editor will autosave the deltas (changes) to the document(s), together with occasional biometrics from the author and other environmental variables like the time in the current time zone, the current air pressure, the temperature, and more.
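To make the idea a little more tangible, here is a rough sketch of such a repository. The class names, the use of unified diffs, and the environment fields are all assumptions made for illustration; biometrics and the autosave timer are left out entirely.

```python
import difflib
import time
from dataclasses import dataclass, field


@dataclass
class Delta:
    timestamp: float   # seconds since the epoch when the change was saved
    diff: list         # unified diff lines from the previous text to the new text
    environment: dict  # hypothetical readings: time zone, pressure, temperature, ...


@dataclass
class MessageRepository:
    text: str = ""
    deltas: list = field(default_factory=list)

    def autosave(self, new_text: str, environment: dict) -> bool:
        """Record the change since the last save; return False if nothing changed."""
        if new_text == self.text:
            return False
        diff = list(difflib.unified_diff(
            self.text.splitlines(), new_text.splitlines(), lineterm=""))
        self.deltas.append(Delta(time.time(), diff, dict(environment)))
        self.text = new_text
        return True


repo = MessageRepository()
env = {"timezone": "Europe/Copenhagen", "pressure_hpa": 1013, "temperature_c": 21.5}
repo.autosave("Dear reader,", env)
repo.autosave("Dear reader,\nThis is my message.", env)
repo.autosave("Dear reader,\nThis is my message.", env)  # no change, nothing is stored
print(len(repo.deltas))
```

In a real editor the `autosave` call would be driven by a timer, and the stored deltas are what a receiver could later replay to follow how the message came about.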

I see your next question forming as you read! Is this really necessary? Certainly not for persisting a grocery list, but I guess neither you nor I will be the best judges of that - the receiver will.

I like to think that any sender will lean towards placing as many verifiable facts as possible in the hands of the receiver when they face that terrible question: is this in fact a trustworthy message?

All of this obviously builds on a presumption that the sender/author does indeed compile their message in good faith, founded on facts, and as a bonus pater familias. I am well aware that such a precondition is not by a long shot the standard operated by many authors – recent years have witnessed political movements like trumpism trumpet (pun intended) their alternate-reality facts without remorse, demonstrating rather dinged morals at best. The model I'm peddling will nonetheless operate just as well in any reality. It only takes receivers to acknowledge and trust the sender; and if they get verification that the sender is indeed who they claim to be, then by their standards the message will be trustworthy (in their reality).

Any content, any message, clearly will grow in size depending on the number of variables allowed by the sender/author. Battling this issue will add to the exchange protocol between sender and receiver. Receivers may instruct their machines to initially ask the sender's machine only for the content, and then, only when instructed, to start asking for metadata.
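A toy version of that two-step exchange might look like the following. `SenderMachine`, `ask_content`, and `ask_metadata` are hypothetical names, and the calls are plain in-process method calls rather than the network requests a real exchange would need.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class SenderMachine:
    """Hypothetical sender-side machine answering a receiver's questions."""
    content: str
    metadata: dict = field(default_factory=dict)

    def ask_content(self) -> str:
        """First round: hand over the message itself, nothing more."""
        return self.content

    def ask_metadata(self, keys: Optional[list] = None) -> dict:
        """Later rounds: hand over only the requested metadata (all of it if keys is None)."""
        if keys is None:
            return dict(self.metadata)
        return {k: self.metadata[k] for k in keys if k in self.metadata}


sender = SenderMachine(
    content="Meet me at the old oak at noon.",
    metadata={"timezone": "Europe/Copenhagen", "temperature_c": 21.5, "deltas": 42},
)

message = sender.ask_content()               # the cheap first request
details = sender.ask_metadata(["timezone"])  # only when the receiver insists
print(message)
print(details)
```

The point of the split is that a receiver who already trusts the sender never pays for the metadata, while a sceptical one can keep asking for more.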

So far I've applied only the DNS protocol and the HTTPS protocol, and retrieving the message/content could easily utilise the HTTPS protocol as well, with one caveat: HTTPS is a stateless protocol, and in a fully decentralized setup where each sender keeps all machines on their own device it may prove a more convoluted endeavour to facilitate the workflow than in one where most of the machines are operated out of 'fixed locations'. Allow me to elaborate.

Senders operating from their device while roving will change network address frequently. That is not a problem by itself, as dynamic DNS can update the mapping from ATHLETE69 to some IP address almost immediately – but moving between various networks with a host of different firewalls could hamper the questioning and the requests for transmission, depending upon the ports allowed inbound to a network. Ports 80 and 443, which are used by HTTP/S, are seldom open from the outside inwards, only from the inside outwards, or they are directed at specific local area network addresses in a DMZ because the network has web servers placed there. It's not undoable; it will just require some careful consideration.

Optimally all senders would opt for hosting-provider services to complete tasks commanded by the sender's machines, but such luxury will severely narrow the total addressable market! One prominent way of overcoming unavailable ports, also described as networks not being traversable, is adding something called TURN servers (short for Traversal Using Relays around NAT). This, however, adds considerable complexity to the overall design. Another design choice would be store/forward, where messages are kept in escrow until users' machines are able to collect them, much like email operates. Not a flexible choice either. Then there is the appliance model.

From a privacy point of view the appliance model is perfect. From a system design point of view, equally so. Considering the user experience, most certainly too. You could say "it just works", to quote Steve Jobs back in 2011 when introducing iCloud.

When signing up for a pigeon token the user will receive a small appliance, perhaps the size of a packet of chewing gum, that they will attach to their home network.

This obviously will only work if you actually have an Internet connection at home (some 70% in the EU, about 97% in the US, and 60+% globally as of 2023). The appliance will host the data store and perhaps other machines such as the certificate authority, even if the user may prefer to buy third-party services for other reasons. Now the store/forward design suddenly sits a lot more comfortably with me.

Senders may leave notices with the 'user' / receiver without them even having their device turned on, and without any privacy issues coming into play. The user may choose to let machines do the verification and message transmission fully detached from their own device, and in timeslots (e.g. when the user is sleeping) that do not disturb the user. Roving with their device, the user will 'call home' at intervals or choose to stay connected and operate a TURN server themselves. Options will be available. And, importantly, the aforementioned alternatives are still viable – it's entirely up to the consumer/user and their desire for privacy, security, comfort, and ease of application.
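For the curious, the store/forward part of the appliance can be sketched in a few lines. The `Appliance` class and its method names are invented for this illustration, and a real appliance would of course persist its escrow and authenticate both senders and the calling device.

```python
from collections import deque


class Appliance:
    """Hypothetical home appliance keeping notices in escrow for its owner."""

    def __init__(self):
        self._escrow = deque()

    def leave_notice(self, sender: str, notice: str) -> None:
        """Called by a sender's machine; works while the owner's device is off."""
        self._escrow.append((sender, notice))

    def call_home(self) -> list:
        """Called by the roving device: hand over everything held, oldest first."""
        collected = list(self._escrow)
        self._escrow.clear()
        return collected


appliance = Appliance()
appliance.leave_notice("ATHLETE69", "New message available")
appliance.leave_notice("ATHLETE69", "Metadata updated")

first_call = appliance.call_home()   # both notices, in the order they arrived
second_call = appliance.call_home()  # nothing new since the last call
print(first_call)
print(second_call)
```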